Data literacy with Python

Real World Datasets

There are many sources of data on the internet. Governments make public sector data available for activities such as Hackathons, allowing diverse groups of people to provide innovative solutions for communities.

For example, the West Australian State Government has the site Data WA, with over 2000 datasets.

The Australian Government has the site data.gov.au, with over 100,000 datasets.

Another good source is the Australian Bureau of Statistics (ABS)

Dataset 1 - Accessing and Cleaning

Our first dataset is Australian Taxation Statistics 2021-2022, in particular Table 6B which gives summary tax details for individual returns by postcode.

This dataset can be accessed directly from Python, it is an Excel xlsx file. It is published under a Creative Commons Attribution 2.5 Australia licence so is suitable for use here.

Previewing this file, the data really starts in row 2 with the column names. Let’s say we are only interested in some data, somewhat arbitrarily Taxable Income and Private Health cover status related data across States and Postcodes. So we only need to import Columns 1, 3, 4, 6 and 155 (in Python these are referenced as 0, 2, 3, 5 and 154 as the first column is numbered ‘0’).

tax2022_url = 'https://data.gov.au/data/dataset/4be150cc-8f84-46b8-8c61-55ff1d48a700/resource/43d41d1d-4e39-45df-b693-a06255779cff/download/ts22individual06taxablestatusstatesa4postcode.xlsx'

tax2022_raw = pd.read_excel(tax2022_url, sheet_name='Table 6B', skiprows=1, usecols=[0,2,3,5,154])

tax2022_raw["Postcode"] = tax2022_raw["Postcode"].astype('str').str.pad(width=4, side='left', fillchar='0')

tax2022_raw.head(5)

| State/ Territory1 | Postcode | Individuals\nno. | Taxable income or loss4\n$ | People with private health insurance\nno. | |

|---|---|---|---|---|---|

| 0 | ACT | 2600 | 5951 | 791214764 | 4841 |

| 1 | ACT | 2601 | 3614 | 265604097 | 1965 |

| 2 | ACT | 2602 | 23085 | 2026942835 | 15791 |

| 3 | ACT | 2603 | 7558 | 1055186744 | 5926 |

| 4 | ACT | 2604 | 9137 | 953261746 | 6649 |

Python/Pandas provides the functionality to change the column names to something easier to work with.

tax2022_raw.columns = ['State', 'Postcode', 'Returns', 'TaxableIncome_dollars', 'PrivateHealth_returns']

tax2022_raw.head(5)

| State | Postcode | Returns | TaxableIncome_dollars | PrivateHealth_returns | |

|---|---|---|---|---|---|

| 0 | ACT | 2600 | 5951 | 791214764 | 4841 |

| 1 | ACT | 2601 | 3614 | 265604097 | 1965 |

| 2 | ACT | 2602 | 23085 | 2026942835 | 15791 |

| 3 | ACT | 2603 | 7558 | 1055186744 | 5926 |

| 4 | ACT | 2604 | 9137 | 953261746 | 6649 |

Using only code, Python/Pandas provides the ability to get structure information (info()) and summary descriptions (describe()) of the data.

- Structure/Info

tax2022_raw.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 2639 entries, 0 to 2638

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 State 2639 non-null object

1 Postcode 2639 non-null object

2 Returns 2639 non-null int64

3 TaxableIncome_dollars 2639 non-null int64

4 PrivateHealth_returns 2639 non-null int64

dtypes: int64(3), object(2)

memory usage: 103.2+ KB

- Summary/Describe

tax2022_raw.describe()

| Returns | TaxableIncome_dollars | PrivateHealth_returns | |

|---|---|---|---|

| count | 2639.000000 | 2.639000e+03 | 2639.000000 |

| mean | 5886.849185 | 4.257770e+08 | 3312.040925 |

| std | 8672.895406 | 6.176270e+08 | 4695.577501 |

| min | 51.000000 | 1.368192e+06 | 12.000000 |

| 25% | 412.500000 | 2.389290e+07 | 212.000000 |

| 50% | 2103.000000 | 1.300142e+08 | 1079.000000 |

| 75% | 8608.500000 | 6.246685e+08 | 5010.500000 |

| max | 123657.000000 | 5.074196e+09 | 36635.000000 |

To filter rows, columns, or any combination of these, there are multiple ways of achieving this in Python/Pandas. Python uses 0-based indexing, the first index of a list is 0.

Filter by

- Columns/Fields, either by column number

tax2022_raw.iloc[:, 1].head(3) # 2nd column

0 2600

1 2601

2 2602

Name: Postcode, dtype: object

- or by Column ‘Name’ (with tail instead of head, to access the last rows of the data frame),

tax2022_raw.loc[:, "Postcode"].tail(4)

2635 6951

2636 6959

2637 6985

2638 WA other

Name: Postcode, dtype: object

- to retrieve the unique values from a column,

tax2022_raw["State"].unique()

array(['ACT', 'NSW', 'NT', 'Overseas', 'QLD', 'SA', 'TAS', 'VIC', 'WA'],

dtype=object)

- Rows/Records, either by row number, returned a row as a series,

tax2022_raw.iloc[1] #

State ACT

Postcode 2601

Returns 3614

TaxableIncome_dollars 265604097

PrivateHealth_returns 1965

Name: 1, dtype: object

- Rows/Records, either by row number, returned a row as a data frame,

tax2022_raw.iloc[[1]] # return a row as a data frame

| State | Postcode | Returns | TaxableIncome_dollars | PrivateHealth_returns | |

|---|---|---|---|---|---|

| 1 | ACT | 2601 | 3614 | 265604097 | 1965 |

- by rows which have particular values (or categories) in a column,

tax2022_raw[tax2022_raw["State"].isin(["Unknown","Overseas"])] # returns rows where State **is** Unknown or Overseas

| State | Postcode | Returns | TaxableIncome_dollars | PrivateHealth_returns | |

|---|---|---|---|---|---|

| 698 | Overseas | Overseas | 123657 | 3888397917 | 17557 |

- by Rows and Columns, either by row and column number,

tax2022_raw.iloc[1,1] # 2nd row, 2nd column

'2601'

- or by the row with the maximum value of a column, and returning only three columns of the dataframe.

tax2022_raw.loc[tax2022_raw["PrivateHealth_returns"] == tax2022_raw["PrivateHealth_returns"].max(), ["State","Postcode","PrivateHealth_returns"]]

| State | Postcode | PrivateHealth_returns | |

|---|---|---|---|

| 852 | QLD | 4350 | 36635 |

There are two interesting aspects of the data above which demonstrate the need to ‘clean’ data.

-

The tail command above reveals that the data is not exclusively ‘per postcode’; if the number of returns was small those postcodes are grouped into an ‘Other’ row. We will leave this for the moment and observe the impact later in this workflow.

-

There are some State values for ‘Overseas’ and ‘Unknown’ which are not of interest. In Python we could create a new dataframe without these. We can also check the new dataframe with the filter to check that it returns no matching rows.

# Create a new, intermediate table without these rows

tax2022_raw_aus = tax2022_raw[~tax2022_raw["State"].isin(["Unknown","Overseas"])]

# Check the rows are now excluded

tax2022_raw_aus[tax2022_raw_aus["State"].isin(["Unknown","Overseas"])].info()

<class 'pandas.core.frame.DataFrame'>

Index: 0 entries

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 State 0 non-null object

1 Postcode 0 non-null object

2 Returns 0 non-null int64

3 TaxableIncome_dollars 0 non-null int64

4 PrivateHealth_returns 0 non-null int64

dtypes: int64(3), object(2)

memory usage: 0.0+ bytes

Method Chaining

In cleaning and subsetting the data above we now have two data frames, namely tax2022_raw and tax2022_raw_aus. In trying to keep the Python commands relatively short and understandable we can end up with a lot of intermediate or temporary data frames, which can be difficult to keep track of. Another alternative is to use method chaining, where one method is called on another method, and so on, forming a ‘chain’ of methods (or actions) performed on the data. Effectively the output of one command is ‘piped’ into the next command and so on. This strikes a great balance between command readability and minimising intermediate data frames.

Method chaining is available for a number of core and add-on packages, including Pandas. We have already been using method chaining in much of the above!

For example, to achieve removing the same rows in the previous step, we can apply the query() method directly to the tax2022_raw data and compare the total rows with the previous info() method. Methods are chained using the ‘dot’ operator/notation.

Note that the head command is part of method chaining too.

Note also that for the remainder of the workshop we have shown each new method on a new line, which requires enclosing the whole statement in outer brackets (). The indents here are not critical, as distinct from other commands in Python such as loops or if-the-else, where indentation is critical.

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.info())

<class 'pandas.core.frame.DataFrame'>

Index: 2638 entries, 0 to 2638

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 State 2638 non-null object

1 Postcode 2638 non-null object

2 Returns 2638 non-null int64

3 TaxableIncome_dollars 2638 non-null int64

4 PrivateHealth_returns 2638 non-null int64

dtypes: int64(3), object(2)

memory usage: 123.7+ KB

Key Learning

Key Learning #1 - Data is data, there is no need to constantly have a dedicated view for the raw, original data. These tools allow us to view it in any form needed so as to inform our analysis and visualisation.

Key Learning #2 - Cleaning the data involves investigating the original data, leaving it as it is and writing code to create a workable dataset, having removed unnecessary or incorrect data.

Key Learning #3 - Using method chaining makes it simpler to prepare, read and modify code and eliminates the need for the clutter of many intermediate or temporary data frames.

Further Learning

Further Learning #1 - The datasets in this workshop are quite ‘clean’ and complete. Then there are datasets which are incomplete with data that is not a number or NAN - for another time.

Dataset 1 - Analysis

As an example, let’s perform some aggregate functions, such as sums or totals of dollars and returns for each State. Whereas query() acts on rows, drop() can act on columns and rows (axis=). We will aggregate by State, so we remove the Postcode column using drop(). We could select the rows we require as per above, however here it is more convenient to drop the few column(s) we don’t require.

To calculate the sums we can pass the data to a groupby() command to group by State, and then pass that result to a sum() command to perform the aggregation on all columns.

# Totals by State

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop("Postcode", axis=1)

.groupby("State")

.sum())

| Returns | TaxableIncome_dollars | PrivateHealth_returns | |

|---|---|---|---|

| State | |||

| ACT | 301650 | 25305959656 | 199369 |

| NSW | 4788259 | 366314190746 | 2832812 |

| NT | 132529 | 9640785020 | 66821 |

| QLD | 3156186 | 216127627636 | 1607801 |

| SA | 1065717 | 68138353958 | 638572 |

| TAS | 329221 | 20005188929 | 171046 |

| VIC | 3958298 | 284549647726 | 2054944 |

| WA | 1679878 | 129655460938 | 1151554 |

To calculate the sums for the whole of Australia for 2021-2022, let’s filter the State column too.

# Totals for Australia

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop(["Postcode","State"], axis=1)

.sum())

Returns 15411738

TaxableIncome_dollars 1119737214609

PrivateHealth_returns 8722919

dtype: int64

As a further example, let’s calculate the

- Taxable Income per return per State, and

- Percent of Private Health Insurance per State

Here we are creating two new calculated columns based on the data for each row, and so will use assign() to create the new columns and mean() for the summary.

# Means per State

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop("Postcode", axis=1)

.assign(TaxableIncome_dollarspr = lambda x: x.TaxableIncome_dollars/x.Returns)

.assign(PrivateHealth_percentpp = lambda x: x.PrivateHealth_returns/x.Returns*100)

.loc[:, ["State", "TaxableIncome_dollarspr", "PrivateHealth_percentpp"]]

.groupby("State")

.mean())

| TaxableIncome_dollarspr | PrivateHealth_percentpp | |

|---|---|---|

| State | ||

| ACT | 86592.310650 | 64.491001 |

| NSW | 71809.852336 | 60.113227 |

| NT | 72862.817283 | 49.597764 |

| QLD | 63037.803493 | 50.046355 |

| SA | 60967.223483 | 58.458858 |

| TAS | 58154.107697 | 50.852413 |

| VIC | 66824.784228 | 49.701952 |

| WA | 73781.555452 | 66.536369 |

Wide and Narrow Formats

The raw data is in summary form, or wide form. Easily read by people, not ideal for all the processing options available for machines eg AI.

Sometimes tasks in Python are more easily achieved with the data in narrow or long format, where each row essentially only has one item of data.

Fortunately Python/Pandas have tools which allow for easily swapping between formats, namely melt and pivot.

What is important is knowing which columns are to be kept, often called identifier variables ( Postcode and State ), and which columns are to be pivoted, often called measured variables ( Returns, Taxable Income and Private Health status ).

Let’s create a temporary dataframe tax2022_raw_long here just to show the effect of moving from wide to narrow/long formats and back again.

From Wide to Narrow/Long

tax2022_raw_long = (tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.melt(id_vars=["State","Postcode"],var_name="item"))

tax2022_raw_long.head(5)

| State | Postcode | item | value | |

|---|---|---|---|---|

| 0 | ACT | 2600 | Returns | 5951 |

| 1 | ACT | 2601 | Returns | 3614 |

| 2 | ACT | 2602 | Returns | 23085 |

| 3 | ACT | 2603 | Returns | 7558 |

| 4 | ACT | 2604 | Returns | 9137 |

and then back from Narrow/Long to Wide

(tax2022_raw_long.pivot(index=["State","Postcode"],columns="item",values="value")

.reset_index()

.head(5))

| item | State | Postcode | PrivateHealth_returns | Returns | TaxableIncome_dollars |

|---|---|---|---|---|---|

| 0 | ACT | 2600 | 4841 | 5951 | 791214764 |

| 1 | ACT | 2601 | 1965 | 3614 | 265604097 |

| 2 | ACT | 2602 | 15791 | 23085 | 2026942835 |

| 3 | ACT | 2603 | 5926 | 7558 | 1055186744 |

| 4 | ACT | 2604 | 6649 | 9137 | 953261746 |

Visualisation in Python

Python also has functions to visualise data. One common package used for visualisations is matplotlib, which along with Pandas can create visualisations with a minimal effort.

The code defines the data to be used, and then we use code to generate the graphs and assign values to the different aspects of the graphs. Code generated visualisation can be very efficient compared to GUI based platforms.

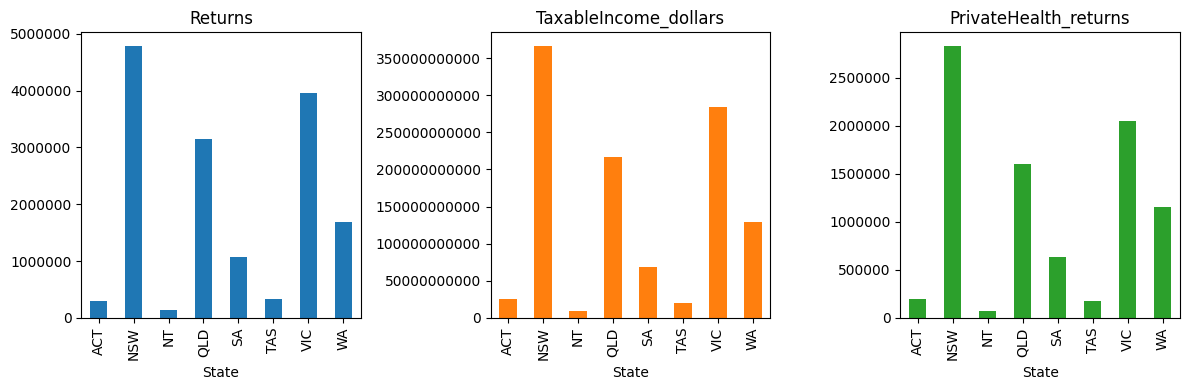

For example, to quickly visualise (without much styling!) the summed totals of the raw data per State

- we take the code from earlier (#Totals by State),

- and simply use .plot.bar to create a barchart. Using subplots shows the different variables in separate subplots or facets, each with independent y axis ranges.

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop("Postcode", axis=1)

.groupby("State")

.sum()

.reset_index()

.plot.bar(x="State",subplots=True, figsize=(12, 4), layout=(1,3), legend=False))

plt.tight_layout()

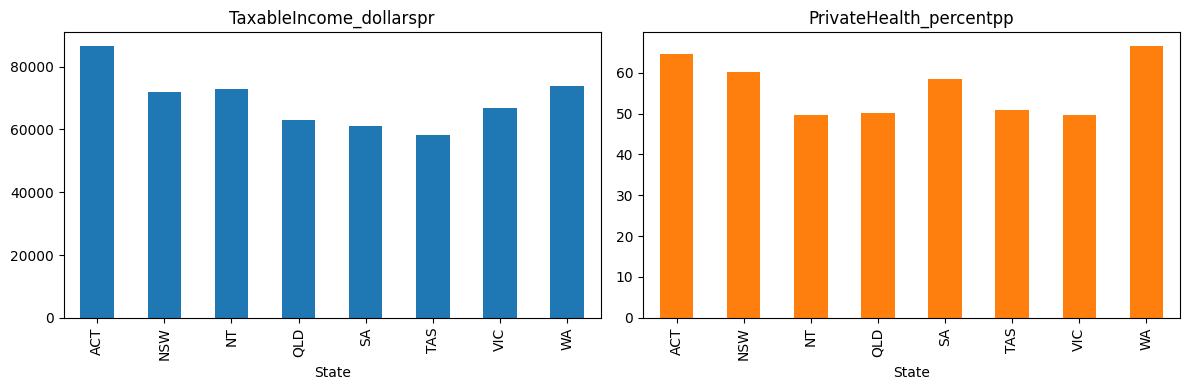

To quickly visualise the mean values per state

- we take the code from earlier (#Means per State),

- and similarly use .plot.bar to create a barchart.

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop("Postcode", axis=1)

.assign(TaxableIncome_dollarspr = lambda x: x.TaxableIncome_dollars/x.Returns)

.assign(PrivateHealth_percentpp = lambda x: x.PrivateHealth_returns/x.Returns*100)

.loc[:, ["State", "TaxableIncome_dollarspr", "PrivateHealth_percentpp"]]

.groupby("State")

.mean()

.reset_index()

.plot.bar(x="State",subplots=True, figsize=(12, 4), layout=(1,2), legend=False))

plt.tight_layout()

Note: These plots are images; they can become blurry when enlarged and are not responsive on different devices such as mobiles or tablets. Other visualisation tools, such as Bokeh (presented later) are more versatile as they effectively recreate the plot to suit each device.

Automation

When the source data changes, for example more data samples are collected or updated, using code to manipulate the data brings a massive advantage - automation. The same code can be re-executed on the new data for updated analysis and visualisations.

As an example, here is the code used to sum the three Taxation parameters for Australia for 2021-2022, re-executed for the 2020-2021 dataset, also published under Creative Commons Attribution 2.5 Australia. The code required a small tweak, the columns are in a different order compared to 2021-2022.

Compare the results for 2020/21 and 2021/22.

- 2020/2021

tax2021_url = 'https://data.gov.au/data/dataset/07b51b39-254a-4177-8b4c-497f17eddb80/resource/fa05bac8-079d-4eba-bd4d-779466e45f02/download/ts21individual06taxablestatusstateterritorypostcode.xlsx'

tax2021_raw = pd.read_excel(tax2021_url, sheet_name='Table 6B', skiprows=1, usecols=[0,1,2,4,153])

tax2021_raw.columns = ['State', 'Postcode', 'Returns', 'TaxableIncome_dollars', 'PrivateHealth_returns']

(tax2021_raw.query('~State.isin(["Unknown","Overseas"])')

.drop(["Postcode","State"], axis=1)

.assign(TaxableIncome_dollarspr = lambda x: x.TaxableIncome_dollars/x.Returns)

.assign(PrivateHealth_percentpp = lambda x: x.PrivateHealth_returns/x.Returns*100)

.loc[:, ["TaxableIncome_dollarspr", "PrivateHealth_percentpp"]]

.mean())

TaxableIncome_dollarspr 62881.598176

PrivateHealth_percentpp 55.713724

dtype: float64

- 2021/22

(tax2022_raw.query('~State.isin(["Unknown","Overseas"])')

.drop(["Postcode","State"], axis=1)

.assign(TaxableIncome_dollarspr = lambda x: x.TaxableIncome_dollars/x.Returns)

.assign(PrivateHealth_percentpp = lambda x: x.PrivateHealth_returns/x.Returns*100)

.loc[:, ["TaxableIncome_dollarspr", "PrivateHealth_percentpp"]]

.mean())

TaxableIncome_dollarspr 67519.740410

PrivateHealth_percentpp 55.812433

dtype: float64

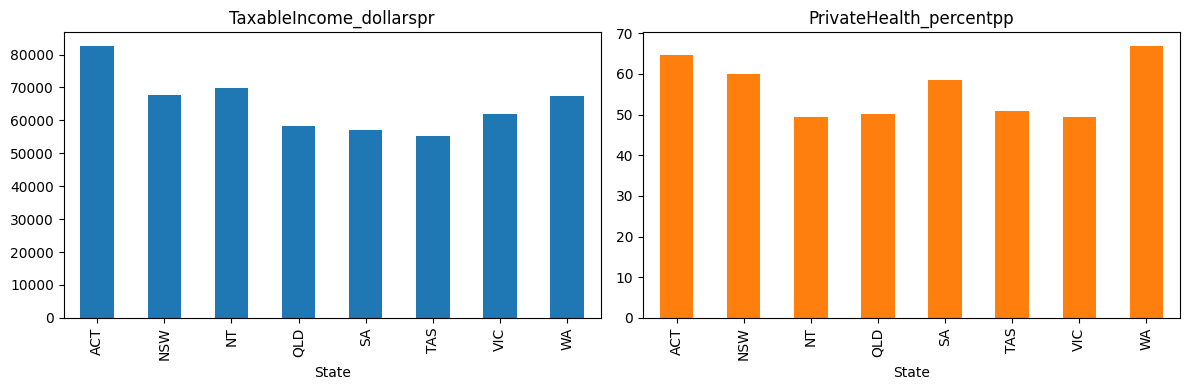

Also compare the plot of mean values for 2020/21.

(tax2021_raw.query('~State.isin(["Unknown","Overseas"])')

.drop("Postcode", axis=1)

.assign(TaxableIncome_dollarspr = lambda x: x.TaxableIncome_dollars/x.Returns)

.assign(PrivateHealth_percentpp = lambda x: x.PrivateHealth_returns/x.Returns*100)

.loc[:, ["State", "TaxableIncome_dollarspr", "PrivateHealth_percentpp"]]

.groupby("State")

.mean()

.reset_index()

.plot.bar(x="State",subplots=True, figsize=(12, 4), layout=(1,2), legend=False))

plt.tight_layout()

Key Learning

Key Learning #4 - Data frame structures are easily transformed in Python, transforming to whatever form is convenient for a particular purpose.

Key Learning #5 - Using code to perform analysis and generate graphs and visualisations saves a lot of time and finessing, particularly when tasks need to be repeated regularly and often.

Further Learning

Further Learning #2 - The above visualisations were generated quickly, without too much concern for formatting. Every aspect of the graphs above can be controlled to give beautiful and purposeful visualisations.